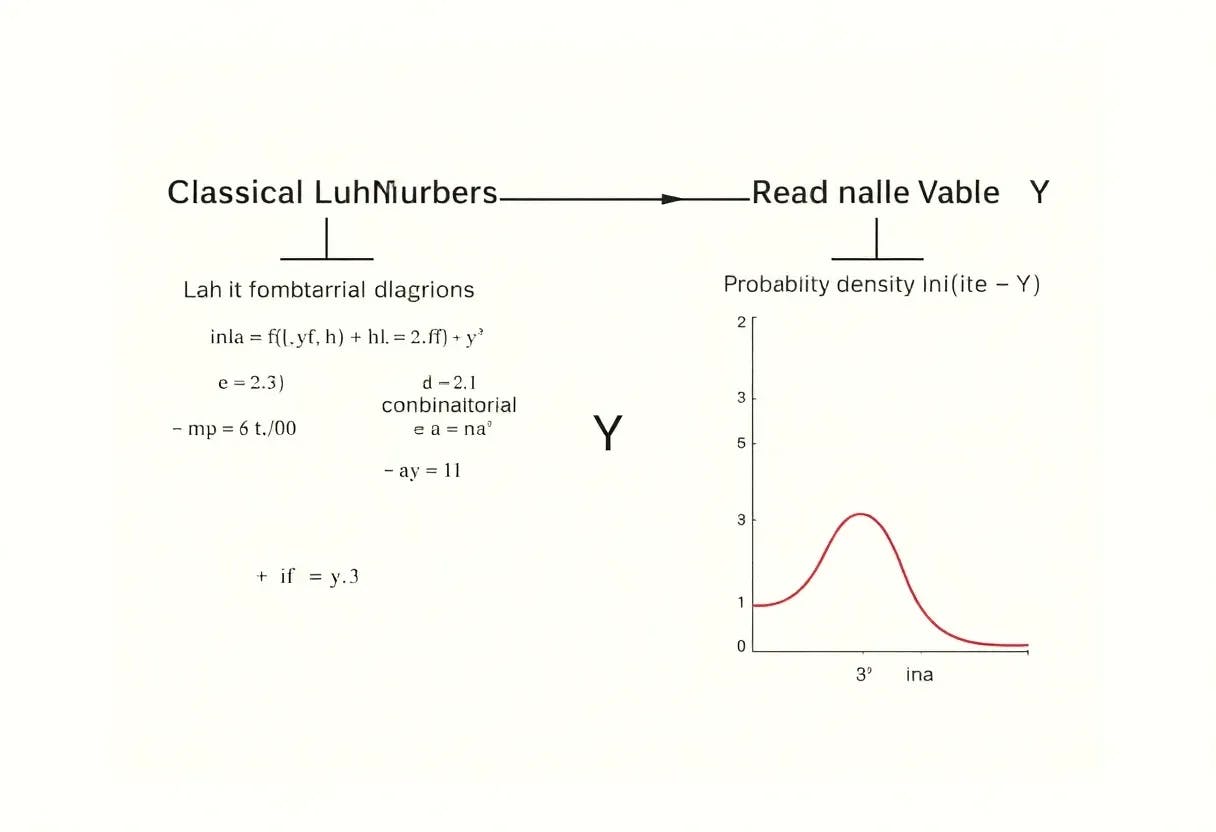

Researchers Blend Probability and Pattern Theory in New Study

14 Oct 2025

Researchers explore how randomness can form patterns by combining probability with Lah and Bell numbers in a new mathematical study.

Why “Classical Excess” Could Be the Next Big Tool in Quantum Research

24 Sept 2025

New research defines a resource theory of generalized contextuality in GPT systems, introducing “classical excess” as a key measure.

Why Simplex Embeddability Matters in Understanding GPTs

24 Sept 2025

Explores contextuality in GPT systems through univalent simulations, simplex embeddability, and resource theories in quantum research.

Does Quantum Theory Hide a Secret Heat Signature?

23 Sept 2025

Explores how fine-tuning in quantum contextuality could be explained by information erasure, entropy, and deeper fundamental theories.

Contextuality 101: What “POM Success Probability” Means for AI

23 Sept 2025

Discover how scientists rank GPT systems by contextuality, using resource theory and the success probability in the POM game as a measure.

The Math Behind GPT Systems and Their Ontological Models

23 Sept 2025

Explore how GPT systems are defined, simulated, and embedded through operational theory, contextuality, and univalent simulations.

A New Hierarchy of Contextuality in Quantum Foundations

23 Sept 2025

Discover a new hierarchy of contextuality that measures how theories depart from classical logic in quantum foundations.

Understanding Contextuality in General Probabilistic Theories

23 Sept 2025

Explore contextuality in General Probabilistic Theories, from qubits to GPT systems, and why it matters in quantum foundations.

Reduce Truth Bias and Speed Up Unfolding with Moment‑Conditioned Diffusion

7 Sept 2025

This article presents a conditional denoising diffusion probabilistic model (cDDPM) that conditions on detector data to sample from P(x|y), enabling multidimensional unfolding and generalization.